Publications

Research in Human-Centered AI, Accessibility, and Social Computing


Contextual Scaffolding and Self-Efficacy: Supporting Computer Skill Development among Blind Learners in India

ACM CHI • April 2026

Akshay Kolgar Nayak, Yash Prakash, Sampath Jayarathna, Hae-Na Lee, Vikas Ashok

Through a four-month contextual inquiry at two computer training centers serving 94 blind or visually impaired (BVI) learners in India, we identify rigid, experience-driven instruction and a visually centered curriculum that can undermine learners’ self-efficacy. We argue for moving beyond functional accessibility toward culturally responsive computing pedagogy, supported by locally adaptable contextual scaffolds tailored to resource-constrained, multicultural settings.

Micro-Behavioral Analysis of Online Shopping Patterns for Blind Users

ACM CHIIR • March 2026

Yash Prakash, Akshay Kolgar Nayak, Nithiya Venkatraman, Sampath Jayarathna, Hae-Na Lee, Vikas Ashok

While online shopping platforms provide convenience and autonomy to blind users, their non-visual interactions remain underexplored at a micro-behavioral level. We report a longitudinal study with 25 blind participants that combines interviews with keyboard activity and screen reader logs collected across familiar and unfamiliar e-commerce websites. We show how cognitive maps and shortcut routines formed on familiar sites streamline navigation, while unfamiliar sites increase navigation entropy, shortcut failures, and exploratory behavior as users rebuild mental models. We discuss design considerations for assistive technologies and e-commerce platforms to improve non-visual shopping.

VoxVista: Enhancing Screen Reading Experience for Online User Comments

ACM CHIIR • March 2026

Yash Prakash, Akshay Kolgar Nayak, Shoaib Alyaan, Sampath Jayarathna, Hae-Na Lee, Vikas Ashok

Blind users often experience online comment threads as long, monotone narration that increases fatigue and makes it harder to track conversational flow and emotional intent. VoxVista introduces an LLM-driven multi-voice framework that assigns personalized voice profiles to posts using a custom voice-preference dataset, creating more expressive and context-aware narration. A study with 20 blind participants shows improved engagement, comprehension, and willingness to continue listening to longer discussions.

Examining Inclusive Computing Education for Blind Students in India

ACM SIGCSE TS '26 • February 2026

Akshay Kolgar Nayak, Yash Prakash, Md Javedul Ferdous, Sampath Jayarathna, Hae-Na Lee, Vikas Ashok

We examine the state of inclusive computing education for blind and visually impaired (BVI) students in India, a resource-constrained Global South context. Through an interview study with 15 BVI students, instructors, and professionals, we identify key challenges including inaccessible instructional materials, heavy reliance on peer support, and the cognitive burden of simultaneously learning computing concepts and screen readers. Our findings reveal gaps in curriculum and instructor training, which often confine BVI individuals to basic, non-developer job roles. We provide recommendations to restructure curricula and propose self-learning assistive tools to foster more equitable and accessible computing education.

Insights in Adaptation: Examining Self-reflection Strategies of Job Seekers with Visual Impairments in India

ACM CSCW • October 2025

Akshay Kolgar Nayak, Yash Prakash, Sampath Jayarathna, Hae-Na Lee, Vikas Ashok

We present a study on self-reflection strategies among blind and visually impaired (BVI) job seekers in India. Despite gaining digital skills, many face challenges aligning with industry expectations due to limited personalized feedback and inaccessible job-prep tools. Self-reflection is often a social process shaped by peer interactions, yet current systems lack the tailored support needed for effective growth. Our findings inform the design of future tools to better guide reflective job-seeking and address the unique needs of BVI individuals in the Global South.

Understanding Online Discussion Experiences of Blind Screen Reader Users

IJHCI • 2025

Md Javedul Ferdous, Akshay Kolgar Nayak, Yash Prakash, Nithiya Venkatraman, Sampath Jayarathna, Hae-Na Lee, Vikas Ashok

Online discussion platforms are vital for socializing and information exchange, yet blind screen reader users' conversational experiences remain largely unexplored. Through semi-structured interviews with blind participants active on Reddit, Facebook, and YouTube, we uncovered critical challenges including difficulty joining ongoing conversations, tracking replies to their posts, and comprehending context-dependent content. Participants expressed needs for text standardization, sub-thread summarization, and sub-conversation navigation links. They preferred longer context-rich posts and hierarchical organization over linear presentation. We discuss LLM-driven design solutions including semantic conversation disentanglement using chain-of-thought prompting, dynamic voice profiling for different posts, and intelligent summarization features to reduce cognitive load and enhance participation in online discussions.

AccessMenu: Enhancing Usability of Online Restaurant Menus for Screen Reader Users

ACM Web4All • April 2025

Nithiya Venkatraman*, Akshay Kolgar Nayak*, Suyog Dahal, Yash Prakash, Hae-Na Lee, Vikas Ashok

Visual restaurant menus in PDF and image formats create substantial barriers for blind screen reader users ordering food online. An interview study with 12 BVI participants revealed that current OCR tools produce illogically ordered outputs, contextual hallucinations, and legend misinterpretations. AccessMenu addresses these issues through a browser extension leveraging GPT-4o-mini with custom Chain-of-Thought prompting to extract menu content (0.80 Entity F1) and re-render it in linearly navigable HTML. The system supports natural language queries for efficient menu filtering. Evaluation with 10 blind participants demonstrated significant improvements in usability and reduced task workload versus JAWS OCR, with participants covering twice as many menu items.

Best Paper Award

Adapting Online Customer Reviews for Blind Users: A Case Study of Restaurant Reviews

ACM Web4All • April 2025

Mohan Sunkara, Akshay Kolgar Nayak, Sandeep Kalari, Yash Prakash, Sampath Jayarathna, Hae-Na Lee, Vikas Ashok

We present QuickCue, an assistive browser extension that improves the usability of online restaurant reviews for blind screen reader users. QuickCue restructures review content into a hierarchical format organized by aspects (e.g., food, service, ambiance) and sentiment (positive/negative), enabling faster, more focused exploration with minimal navigation. Powered by GPT-4, it performs aspect-sentiment classification and generates targeted summaries, significantly reducing listening fatigue and helping users make more informed decisions.

Improving Usability of Data Charts in Multimodal Documents for Low Vision Users

ACM ICMI • November 2024

Yash Prakash, Akshay Kolgar Nayak, Shoaib Mohammed Alyaan, Pathan Aseef Khan, Hae-Na Lee, Vikas Ashok

Multimodal documents pairing charts with text create significant challenges for low vision screen magnifier users on smartphones, who struggle to mentally associate spatially separated information due to a limited viewport and constant panning. Informed by a formative study with 10 low vision participants that revealed key requirements, ChartSync transforms static charts into interactive slideshows featuring magnified views of salient data point combinations identified through LLaMA with Chain-of-Thought and ReAct prompting. Each slide includes tailored voice narration that addresses the split-attention effect. Evaluation with 12 participants demonstrated significant improvements in task completion time and comprehension, along with reduced cognitive load, compared to standard screen magnifiers and existing solutions.

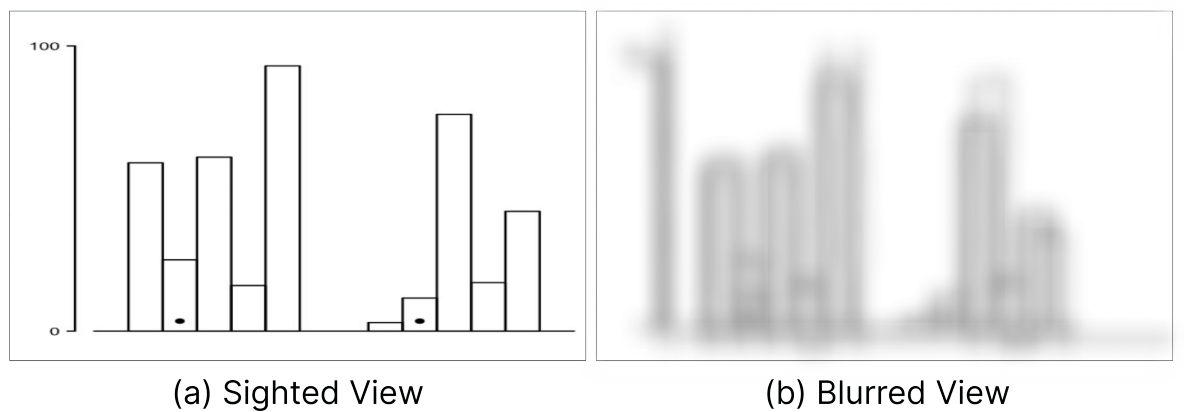

Understanding Low Vision Graphical Perception of Bar Charts

ACM ASSETS • October 2024

Yash Prakash, Akshay Kolgar Nayak, Sampath Jayarathna, Hae-Na Lee, Vikas Ashok

Bar charts are ubiquitous for data representation, yet how low-vision screen magnifier users perceive them remains unexplored. Through four controlled experiments with 25 low-vision participants using a custom screen magnifier logger capturing zooming and panning behaviors, this study reveals critical differences from sighted user perception. Findings show low-vision users invest substantial effort counteracting blurring and contrast effects, with tall distractors significantly elevating error rates contrary to sighted user studies. Adjacent bars within single-column stacks prove harder to interpret than separated bars for some participants, while the "blurring effect" causes systematic height estimation errors. These insights inform future chart design guidelines accommodating low-vision needs.

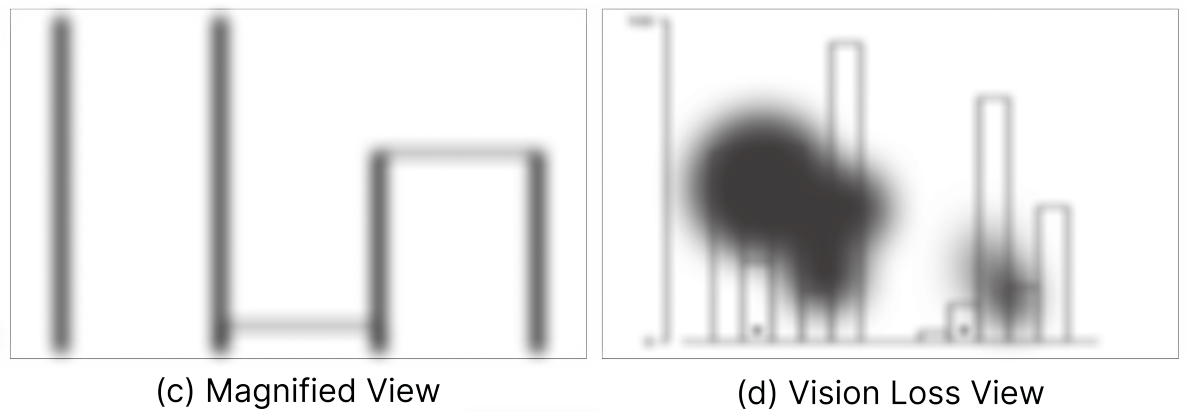

Towards Enhancing Low Vision Usability of Data Charts on Smartphones

IEEE VIS (TVCG) • September 2024

Yash Prakash, Pathan Aseef Khan, Akshay Kolgar Nayak, Sampath Jayarathna, Hae-Na Lee, Vikas Ashok

We present GraphLite, a mobile assistive system that makes data charts more usable for low-vision screen magnifier users. GraphLite transforms static, non-interactive charts into customizable, interactive views that preserve visual context under magnification. Users can selectively focus on key data points, personalize chart appearance, and reduce panning effort through simplified gestures.

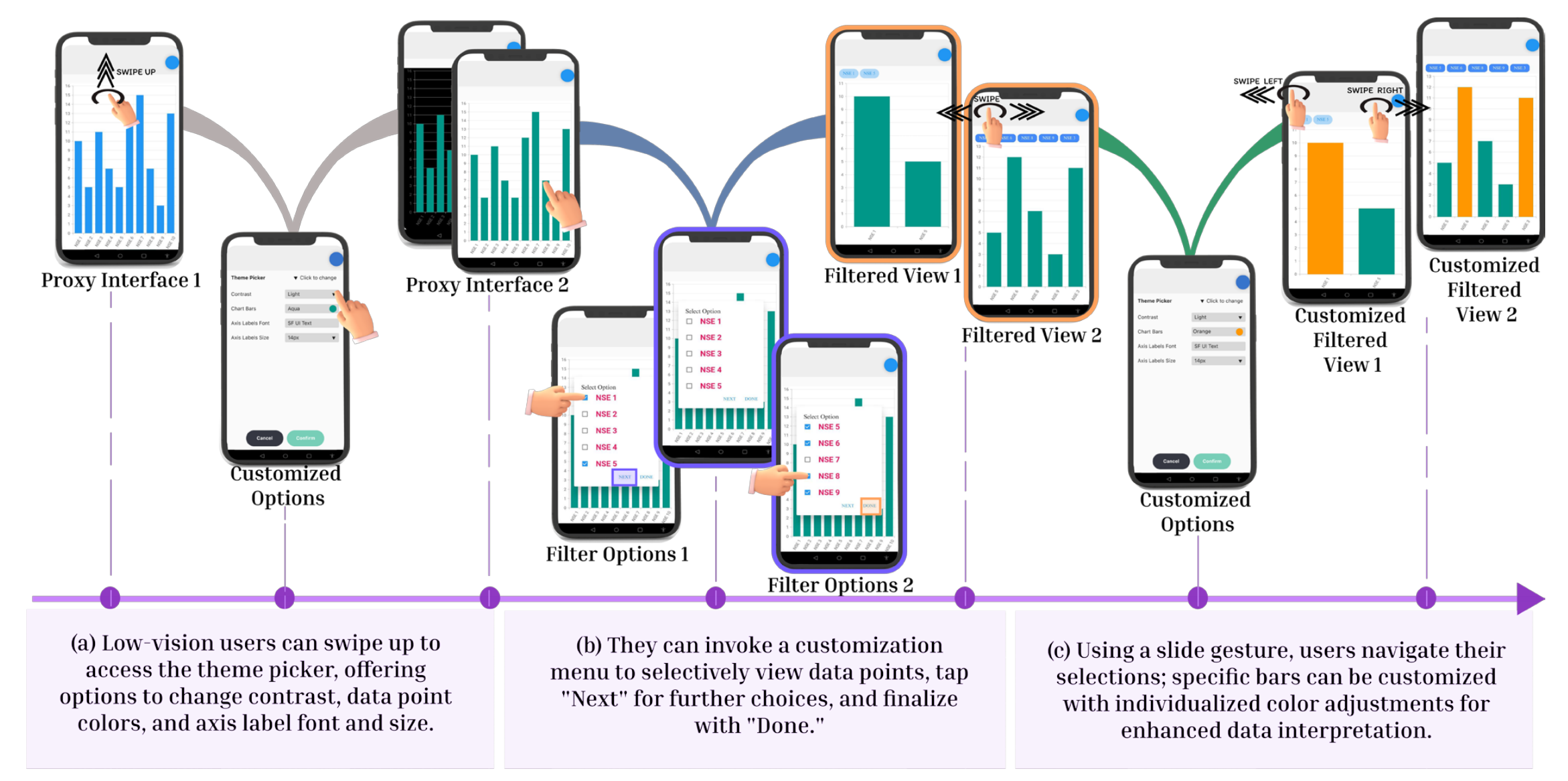

Assessing the Accessibility and Usability of Web Archives for Blind Users

TPDL • September 2024

Mohan Sunkara, Akshay Kolgar Nayak, Sandeep Kalari, Satwik Ram Kodandaram, Sampath Jayarathna, Hae-Na Lee, Vikas Ashok

Web archives preserve digital history for researchers and the public, yet their accessibility for blind users remains unexplored. This study provides the first comprehensive evaluation of five prominent platforms (Wayback Machine, UK Web Archive, Pandora, Trove, Archive.today) through automated analysis of 223 pages and a user study with 10 blind participants. Critical barriers emerged, including missing image alternatives, inadequate ARIA labeling, and inaccessible date-selection widgets. Participants averaged 8.21 minutes and 129 shortcuts per task; Archive.today was the least accessible and UK Web Archive the most usable. These findings inform actionable design recommendations for developers.

All in One Place: Ensuring Usable Access to Online Shopping Items for Blind Users

ACM EICS (PACMHCI) • June 2024

Yash Prakash, Akshay Kolgar Nayak, Mohan Sunkara, Sampath Jayarathna, Hae-Na Lee, Vikas Ashok

We present InstaFetch, a browser extension that transforms e-commerce accessibility for blind screen reader users by eliminating tedious navigation between product list and detail pages. InstaFetch provides a unified interface that consolidates descriptions, specifications, and reviews in one place using a custom Mask R-CNN model trained on 3,000 annotated webpages. Beyond information aggregation, it features natural language querying powered by LLaMA with Retrieval-Augmented Generation, Chain-of-Thought, and ReAct prompting, enabling users to ask complex product questions and receive immediate contextual responses. In evaluations with 14 blind participants, InstaFetch significantly reduced interaction time, the number of keyboard shortcuts used, and cognitive workload while enabling exploration of substantially more products.